As I began integrating Jest into my Next.js project, I learned how powerful and user-friendly it is for creating dependable tests. This blog is a personal walkthrough of my testing journey, from installing Jest to writing my first test cases, applying best practices, and understanding why testing is important.

Best Practices of FastAPI-Python

FastAPI is a modern, fast (high-performance) Python framework for building APIs. Its ease of use and powerful features make it an excellent choice for developers. However, to maximize its potential, it’s crucial to follow best practices. This blog outlines some essential tips to ensure your FastAPI application is secure, scalable, and maintainable.

1. Setting Up Your Project Structure

A well-organized project structure makes your application scalable and easier to maintain. Below is an example:

    project-name/
    ├── app/
    │   ├── main.py               # Entry point for FastAPI app
    │   ├── config.py             # Configuration settings
    │   ├── routes/               # All route definitions
    │   │   ├── __init__.py       # Makes routes a module
    │   │   ├── user.py           # User-related endpoints
    │   │   └── auth.py           # Authentication-related endpoints
    │   ├── models/               # Database models
    │   │   ├── __init__.py       # Makes models a module
    │   │   └── user.py           # User model
    │   ├── services/             # Business logic
    │   │   ├── __init__.py       # Makes services a module
    │   │   └── auth_service.py   # Authentication logic
    │   ├── static/               # Static files (CSS, JS, images)
    │   ├── templates/            # HTML templates
    │   └── utils/                # Utility functions
    │       ├── __init__.py       # Makes utils a module
    │       └── helpers.py        # Helper functions
    ├── tests/                    # Unit and integration tests
    ├── .env                      # Environment variables
    ├── pyproject.toml            # Poetry configuration
    ├── poetry.lock               # Lockfile for consistent builds
    └── README.md                 # Documentation

2. Use Poetry.

Poetry is a dependency management tool that simplifies working with Python projects. Here’s why it’s a great fit for your FastAPI applications:

- Automatic Dependency Resolution: Poetry resolves dependencies and ensures there are no conflicts.

- Isolated Environments: Automatically creates and manages a virtual environment for your project.

- Lockfile Generation: Generates a poetry.lock file that ensures consistent dependency installations across different environments.

- Ease of Use: Handles versioning, packaging, and publishing with simple commands.

3. Environment Variables

Proper configuration of environment variables is critical. Store sensitive information like API keys, database credentials, and secrets in a .env file. Use a library like python-dotenv to load these variables into your application:

poetry add python-dotenv

Example .env file:

    DATABASE_URL=postgresql://user:password@localhost/db_name
    SECRET_KEY=your_secret_key
    AZURE_REGION=your_azure_region
    AZURE_SPEECH_KEY=your_azure_key
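One way to consume these values is to read them once into a small settings object. The sketch below uses only the standard library and assumes the variables are already present in the process environment (for example, after calling load_dotenv() from python-dotenv at startup). The Settings class and its field names are illustrative and not part of FastAPI.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    """Illustrative container for values defined in the .env file."""

    database_url: str
    secret_key: str

    @classmethod
    def from_env(cls) -> "Settings":
        # os.environ[...] raises KeyError when a variable is missing,
        # so a misconfigured deployment fails at startup rather than mid-request.
        return cls(
            database_url=os.environ["DATABASE_URL"],
            secret_key=os.environ["SECRET_KEY"],
        )
```

Calling Settings.from_env() once at startup and passing the result around keeps os.environ lookups out of the rest of the code.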

4. Asynchronous Programming

FastAPI is designed for asynchronous programming, making it perfect for non-blocking I/O operations. Always use async def for endpoints and services where applicable.

Example:

    from fastapi import FastAPI
    from pydantic import BaseModel

    app = FastAPI()


    class Item(BaseModel):
        name: str
        description: str


    @app.post("/items/")
    async def create_item(item: Item):
        return {"message": f"Item {item.name} created successfully!"}

Leverage FastAPI’s asynchronous capabilities for request handling:

FastAPI is built on asynchronous programming principles. Use async def for non-blocking I/O operations such as:

- HTTP requests

- Database queries

- File I/O

Asynchronous HTTP Requests:

Example:

    import httpx

    @app.get("/external-api")
    async def call_external_api():
        async with httpx.AsyncClient() as client:
            response = await client.get("https://api.example.com/data")
            return response.json()

Consequences of Not Using asynchronous programming in FastAPI

- Blocking I/O Operations: When synchronous endpoints are used (i.e., with def instead of async def), any I/O operation like a database query or an external API call blocks the thread handling that request until it completes. FastAPI runs def endpoints in a thread pool, so the number of requests in flight is capped by the pool size, which becomes a bottleneck under load. A blocking call placed inside an async def endpoint is worse still: it stalls the event loop, and no other request can be processed until it returns.

Example of a Blocking Call:

    import requests

    @app.get("/external-api")
    def call_external_api():
        response = requests.get("https://api.example.com/data")
        return response.json()

In the above example, while the requests.get call is waiting for a response, no other requests can be handled by that thread. If multiple clients make similar requests, the application may become unresponsive.

- Inefficient Resource Utilization: FastAPI’s asynchronous nature is optimized for modern architectures. By avoiding async def, your application loses the ability to utilize Python’s asyncio event loop, resulting in inefficient use of CPU and memory resources. For example, instead of handling thousands of requests concurrently, the application might struggle to handle just a few hundred due to threads being locked by blocking calls.

- Poor Scalability: In high-traffic scenarios, synchronous programming severely limits scalability. With synchronous endpoints, each request ties up a thread, and the server can quickly run out of threads to handle additional requests, leading to delays or dropped connections.

- Inconsistent Performance: Synchronous endpoints can cause unpredictable delays for clients, especially if one slow request blocks the thread and prevents other requests from being processed. This inconsistency can degrade the overall user experience.
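The difference is easy to reproduce without a server. The following sketch uses only the standard library: asyncio.sleep stands in for a non-blocking I/O call and time.sleep for a blocking one. The function names and the 0.1-second delay are made up for illustration.

```python
import asyncio
import time

DELAY = 0.1  # stand-in for the latency of one I/O call


async def non_blocking_call() -> None:
    # Yields control to the event loop while "waiting on I/O".
    await asyncio.sleep(DELAY)


async def blocking_call() -> None:
    # time.sleep never yields, so the event loop is stalled for the duration.
    time.sleep(DELAY)


async def offloaded_call() -> None:
    # asyncio.to_thread runs a blocking function in a worker thread,
    # keeping the event loop free (Python 3.9+).
    await asyncio.to_thread(time.sleep, DELAY)


async def timed(call, n: int = 5) -> float:
    """Run n copies of `call` concurrently and return the elapsed seconds."""
    start = time.perf_counter()
    await asyncio.gather(*(call() for _ in range(n)))
    return time.perf_counter() - start


async def main() -> dict:
    return {
        "non_blocking": await timed(non_blocking_call),
        "blocking": await timed(blocking_call),
        "offloaded": await timed(offloaded_call),
    }


if __name__ == "__main__":
    for name, seconds in asyncio.run(main()).items():
        print(f"{name}: {seconds:.2f}s")
```

The blocking variant runs its five calls one after another, so it takes about five times as long as the other two. When a blocking library cannot be avoided inside an async def endpoint, offloading it to a thread as in offloaded_call is one way to keep the event loop responsive.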

5. Exception Handling

FastAPI provides custom exception handling capabilities to manage errors gracefully.

Example of Custom Exception Handling:

    from fastapi import HTTPException

    @app.get("/resource/{item_id}")
    async def read_item(item_id: int):
        if item_id < 1:
            raise HTTPException(status_code=400, detail="Invalid item ID")
        return {"item_id": item_id}

You can also create a global exception handler:

    from fastapi.responses import JSONResponse

    @app.exception_handler(Exception)
    async def global_exception_handler(request, exc):
        return JSONResponse(
            status_code=500,
            content={"message": "An unexpected error occurred."},
        )

6. Logging

Use Python’s built-in logging module for structured logging. Logging provides visibility into the inner workings of your application, making it easier to monitor, troubleshoot, and maintain your code.

Example:

    import logging

    logging.basicConfig(level=logging.INFO)
    logger = logging.getLogger("name")

    @app.on_event("startup")
    async def startup_event():
        logger.info("Application startup complete.")

Output:

INFO:name:Application startup complete.
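basicConfig emits plain text lines like the one above. If your log pipeline expects structured records, a custom formatter is one option. The sketch below uses only the standard library; the JsonFormatter class, the build_logger helper, and the chosen field names are assumptions for illustration.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object (illustrative)."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            # Include the traceback text when logger.exception() is used.
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_logger(name: str, stream=None) -> logging.Logger:
    """Return a logger that writes JSON lines to `stream` (stderr by default)."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger
```

A logger built this way is used exactly like the one above, for example build_logger("app").info("Application startup complete.").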

7. Loading Data During Server Startup

FastAPI provides the lifespan context manager to define tasks during the startup and shutdown phases of your application. For instance, you can load data, establish database connections, or initialize services during the startup phase.

Example of Using lifespan:

    from contextlib import asynccontextmanager

    from fastapi import FastAPI


    async def get_prompt_from_blob():
        # Simulate fetching data from Azure Blob Storage
        return "Prompt loaded from Blob Storage."


    @asynccontextmanager
    async def lifespan(app: FastAPI):
        # Startup: runs once before the app starts serving requests
        print("Loading data during startup...")
        # Example: Load configuration data from Azure Blob Storage
        app.state.prompt = await get_prompt_from_blob()
        print("Data loaded successfully!")
        yield
        # Shutdown: runs once after the app stops serving requests
        print("Shutting down application...")
        # Example: Cleanup resources


    app = FastAPI(lifespan=lifespan)

8. Secure Your Application

- Use HTTPS in Production: Ensure your app runs over HTTPS using tools like Nginx or a cloud provider.

- Avoid Hardcoding Secrets: Use environment variables for sensitive data.

Authentication and Authorization

For any web application, authentication and authorization are essential to ensure only authorized users can access certain resources.

- OAuth2 with Password Flow: FastAPI supports OAuth2, which is often used in applications to authenticate users. You can also integrate OAuth2 providers like Google, GitHub, or Twitter.

- JWT (JSON Web Tokens): Use JWTs for stateless authentication. After logging in, the user receives a token that should be included in the Authorization header of future requests.

    from fastapi import Depends, HTTPException, status
    from fastapi.security import OAuth2PasswordBearer

    oauth2_scheme = OAuth2PasswordBearer(tokenUrl="token")

    def get_current_user(token: str = Depends(oauth2_scheme)):
        # Validate the token and return the user
        user = decode_jwt(token)  # decode_jwt is a function you would define
        if not user:
            raise HTTPException(
                status_code=status.HTTP_401_UNAUTHORIZED,
                detail="Invalid credentials",
            )
        return user
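The decode_jwt helper is left undefined above. As a rough sketch of what it has to do, the following standard-library code verifies an HS256 signature and the exp claim. The SECRET_KEY value, the required exp claim, and the create_jwt helper are assumptions for illustration; in a real application a maintained JWT library is the safer choice.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SECRET_KEY = b"change-me"  # assumption: load this from the environment in practice


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    # JWTs strip base64 padding; restore it before decoding.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str) -> str:
    digest = hmac.new(SECRET_KEY, signing_input.encode("ascii"), hashlib.sha256).digest()
    return _b64url_encode(digest)


def create_jwt(claims: dict) -> str:
    """Build an HS256-signed token (helper for this example only)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signing_input = f"{header}.{payload}"
    return f"{signing_input}.{_sign(signing_input)}"


def decode_jwt(token: str) -> Optional[dict]:
    """Return the claims if the token is valid and unexpired, else None."""
    try:
        header_b64, payload_b64, signature = token.split(".")
        expected = _sign(f"{header_b64}.{payload_b64}")
        # compare_digest avoids leaking information through timing.
        if not hmac.compare_digest(signature.encode("ascii"), expected.encode("ascii")):
            return None
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
    except ValueError:
        # Covers a wrong segment count, bad base64, bad JSON, and non-ASCII input.
        return None
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        return None
    if not isinstance(claims, dict):
        return None
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or exp < time.time():
        return None
    return claims
```

A login endpoint would call something like create_jwt({"sub": username, "exp": time.time() + 3600}), and get_current_user would then receive the claims dictionary, or None for a tampered or expired token.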

9. Background Tasks

Offload long-running tasks with FastAPI’s BackgroundTasks, which runs them in the same process after the response has been sent.

Example:

    import asyncio

    from fastapi import BackgroundTasks

    async def send_email(email: str):
        # Simulate a long-running email operation
        await asyncio.sleep(5)
        print(f"Email sent to {email}")

    @app.post("/send-email/")
    async def trigger_email(background_tasks: BackgroundTasks, email: str):
        background_tasks.add_task(send_email, email)
        return {"message": "Email will be sent soon"}

10. Serving Static Files

Use fastapi.staticfiles to serve static files like CSS, JavaScript, or images.

Example:

from fastapi.staticfiles import StaticFiles

app.mount("/static", StaticFiles(directory="app/static"), name="static")

Access static files via /static/<file_name>.

11. Pydantic: Simplifying Input and Output Validation in Python

Pydantic is a powerful library for data validation and settings management in Python. It is especially useful for applications where structured data is required, such as in web APIs, configuration files, and more. By utilizing Python's type annotations, Pydantic allows developers to define models that handle input and output validation seamlessly.

Input Validation with Pydantic

When working with user input or API requests, it's crucial to validate that the data adheres to expected types and constraints. Pydantic helps by defining models with specific types and validation rules.

For example:

    from pydantic import BaseModel, Field

    class User(BaseModel):
        name: str
        age: int
        # Pydantic v2 names this argument `pattern`; on Pydantic v1 it is `regex`.
        email: str = Field(..., pattern=r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$')

    # Example usage
    user_data = {
        "name": "John Doe",
        "age": 30,
        "email": "john.doe@example.com",  # placeholder address
    }

    user = User(**user_data)
    print(user)

In this example, Pydantic ensures that the name is a string, age is an integer, and the email matches the provided regular expression pattern. If any of these conditions aren't met, Pydantic raises a validation error, providing detailed feedback.

Output Validation with Pydantic

Pydantic not only helps validate incoming data but also ensures that the output is structured correctly. After performing operations (like data transformations or API responses), you can define Pydantic models to enforce the correct structure for the output.

    from pydantic import BaseModel

    class ResponseModel(BaseModel):
        status: str
        data: dict

    # Example usage
    response = ResponseModel(status="success", data={"message": "Operation completed successfully"})
    print(response.model_dump_json())  # Pydantic v2; on Pydantic v1 use response.json()

Here, ResponseModel validates that the status is a string and data is a dictionary before the response is returned.

Benefits of Using Pydantic

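- Type Safety: Pydantic validates data against the declared types (coercing compatible values, such as the string "30" to an int, unless strict mode is enabled), reducing the risk of bugs caused by incorrect data types.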

- Automatic Data Validation: You can define complex validation rules using built-in validators, reducing manual error-checking.

- Error Handling: Validation errors are raised with detailed messages, making it easier to debug and correct input data.

- Fast: Pydantic is optimized for performance, ensuring that validation operations are efficient even with large datasets.

12. Practices When Using Global Variables in FastAPI

If you decide to use global variables in FastAPI, follow these best practices to mitigate the disadvantages:

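- Use Thread-Safe Data Structures: Use asyncio.Lock or asyncio.Queue to coordinate access to global variables and avoid race conditions. These protect coroutines running on a single event loop; use threading.Lock when def endpoints running in the thread pool touch the same data. A plain container such as collections.defaultdict provides no such protection by itself.

- Scope Global Variables Appropriately: Limit the scope of global variables to specific use cases, such as application configuration or one-time initialization values.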

- Consider Dependency Injection: Use FastAPI's dependency injection system to pass shared objects (e.g., database connections, configuration data) to endpoints instead of relying on global variables.

- Store Persistent Data Externally: Instead of global variables, use external storage solutions like databases, Redis, or message queues to manage shared data.

- Namespace Globals for Clarity: Use well-structured dictionaries or classes for global data to reduce clutter and prevent accidental overwrites.

- Use Startup and Shutdown Events: Initialize global variables in FastAPI’s startup event and clean them up in the shutdown event to manage their lifecycle explicitly.
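As a small illustration of the first point, the sketch below guards a read-modify-write on shared state with asyncio.Lock. It uses only the standard library. The Counter class is a made-up stand-in for whatever shared object your application keeps, and asyncio.sleep(0) simulates an await (such as a database call) landing between the read and the write.

```python
import asyncio


class Counter:
    """Hypothetical piece of shared application state."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = asyncio.Lock()

    async def increment_unsafe(self) -> None:
        current = self.value
        await asyncio.sleep(0)  # another coroutine may run here
        self.value = current + 1  # may overwrite a concurrent update

    async def increment_safe(self) -> None:
        async with self._lock:  # only one coroutine at a time in this block
            current = self.value
            await asyncio.sleep(0)
            self.value = current + 1


async def run(method_name: str, n: int = 100) -> int:
    """Call the named Counter method n times concurrently; return the final value."""
    counter = Counter()
    method = getattr(counter, method_name)
    await asyncio.gather(*(method() for _ in range(n)))
    return counter.value


if __name__ == "__main__":
    print("unsafe:", asyncio.run(run("increment_unsafe")))
    print("safe:  ", asyncio.run(run("increment_safe")))
```

The unsafe variant loses updates because every coroutine reads the old value before any of them writes, while the locked variant ends at 100. asyncio.Lock only coordinates coroutines on one event loop; it does not protect data shared across threads or worker processes.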

Conclusion

By combining FastAPI with Poetry and following these best practices, you can build scalable, maintainable, and secure applications. From dependency management and environment configuration to exception handling and asynchronous programming, these techniques will ensure your project is ready for production.

Getting Started with Neo4j: Graph Theory and Data Connections

Hi there 👋

This blog introduces readers to the world of graph databases and explores the fundamentals of building graphs.

History of Graphs

To get started with graph databases, it's important to understand what graphs are. Don't you agree?

Let’s travel back in time to the 1730s.

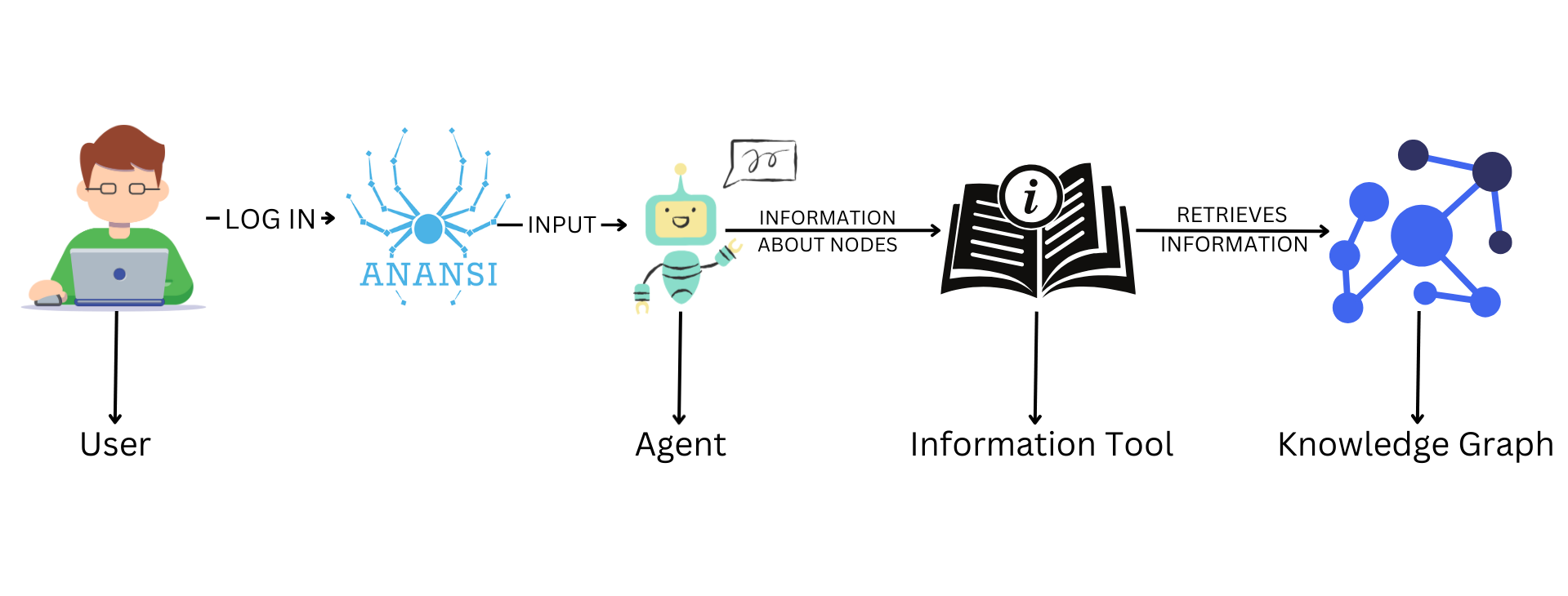

Building a Chatbot with RAG, Langchain, and Neo4j

A chatbot is a software application that uses AI to have conversations with users, helping them find information or answer questions. We built this chatbot using Retrieval-Augmented Generation (RAG) to improve its responses, Neo4j to store structured data, and Large Language Models (LLMs) to understand and generate natural language.

We created 2 types of Nodes/Labels, "Bank" and "Owner" and 1 type of relationship between them: "IS_OWNED_BY". The blog below lays out how we created a chatbot to query the relationship between the Node Types mentioned using RAG (Retrieval Augmented Generation) techniques.

Streamlining API Testing with Postman - A Beginner's Guide

Postman

Postman is a popular API development and testing tool that simplifies the process of working with APIs. It provides a user-friendly interface to send HTTP requests, test, and debug APIs.

How to generate code from Swagger/Open API Specifications

In modern software development, having well-documented APIs is crucial for collaboration and integration. Postman is a popular tool for testing and documenting APIs. The OpenAPI Specification is a widely accepted standard for defining RESTful APIs. In this blog post, we'll walk you through the process of converting a Postman collection into an OpenAPI specification and then using the OpenAPI Generator to create a JavaScript client from it.

There are 2 different ways to approach API development.

- Design First: Postman -> Swagger/OpenAPI Spec -> Code

- Code First: Code -> Postman Code -> Swagger

In this blog post, we will explore the 'Design First' approach to API development.

Design First Vs Code First - High level differences

| Aspect | Design First Approach | Code First Approach |

|---|---|---|

| Initial Project Concept | Requires a clear understanding of project goals and requirements from the start. | Allows for a more flexible approach, where the initial project concept may not be well-defined. |

| Team Composition | Involves a cross-functional design team, including architects and designers. | Relies on a team of developers, testers, and domain experts who can adapt to changing requirements. |

| Starting Point | Begins with a detailed design and specification of the software system. | Starts by writing code to create a basic functional prototype or MVP. |

| Flexibility | May lead to more rigid development, with a focus on adhering to the initial design. | Offers greater adaptability to evolving requirements and allows for frequent changes. |

| Feedback Gathering | Feedback is primarily gathered during the design and specification phase. | Feedback is collected continuously throughout the development process based on the evolving codebase. |

| Documentation | Detailed design documentation is created before implementation. | Documentation is created as the design and specifications emerge during development. |

| Testing and Quality Assurance | Testing is performed against the well-defined design. | Testing is integrated throughout the development process to ensure code quality. |

| Stakeholder Involvement | Stakeholder involvement primarily during design and specification phases. | Continuous stakeholder involvement and feedback are encouraged throughout development. |

| Refactoring and Optimization | Design changes are costly and may require significant rework. | Code is refactored and optimized as needed to maintain quality and adapt to changing requirements. |

| Initial Deployment | Deployment may occur later in the development process. | Allows for continuous deployment of prototypes and updates to gather real-world feedback. |

| Ongoing Maintenance | Maintenance activities are largely predictable based on the initial design. | Ongoing maintenance is essential, with a focus on adapting to evolving needs and addressing emerging issues. |

Both approaches have their advantages and are suited to different project scenarios. The choice between "Design First" and "Code First" depends on the project's specific requirements, goals, and constraints.

Convert a Postman Collection to a Swagger Specification

There are several methods to convert a Postman collection to a Swagger Specification. In this section, we'll discuss some of the most popular ways to accomplish this task:

- Swagger Editor: One of the most common methods is to use the Swagger Editor. This web-based tool provides a user-friendly interface for creating and editing Swagger specifications. It allows you to manually convert your Postman collection into a Swagger Specification by copy-pasting the relevant information.

- Stoplight Studio: Another excellent option is Stoplight Studio, an integrated development environment for designing and documenting APIs. Stoplight Studio provides features that can help streamline the process of converting a Postman collection to Swagger.

- npm Package: If you prefer a programmatic approach, you can explore various npm packages and libraries that are designed to automate the conversion process. These packages often provide command-line tools and scripts to convert your collection to a Swagger Specification, making the process more efficient.

Each of these methods has its advantages and is suitable for different use cases. You can choose the one that best fits your workflow and requirements.

However, for the purpose of this demo, we are showing how to perform the conversion through the npm Package.

Step 1: Installing the "postman-to-openapi" Package

The first step is to install the "postman-to-openapi" package, which allows you to convert Postman collections to OpenAPI specifications. To do this, open your terminal and run the following command:

npm i postman-to-openapi -g

- npm: The Node Package Manager, a package manager for JavaScript that is commonly used to install and manage libraries and tools.

- i: Short for "install", the npm command used to install packages.

- postman-to-openapi: The name of the package you want to install.

- -g: This flag stands for "global". It tells npm to install the package globally on your system, making it available as a command-line tool that you can run from any directory.

Once installed, the package exposes the p2o command, which you can run from any directory.

Step 2: Converting Postman Collections to OpenAPI Specifications

Now that you have "postman-to-openapi" installed, you can convert your Postman collections to OpenAPI specifications. Replace ~/Downloads/REST_API.postman_collection.json with the path to your Postman collection and specify where you want to save the resulting OpenAPI specification. Use the following command:

p2o ~/Downloads/REST_API.postman_collection.json -f ~/Downloads/open-api-result.yml

- p2o: This is the command you use to run the postman-to-openapi tool. It is installed globally on your system using npm i postman-to-openapi -g, as mentioned earlier.

- ~/Downloads/REST_API.postman_collection.json: This is the path to your Postman collection file. Replace it with the actual path to the Postman collection that you want to convert.

- -f: This flag specifies the file where the converted OpenAPI specification will be saved.

- ~/Downloads/open-api-result.yml: This is the path where the resulting OpenAPI specification will be saved as a YAML file. Replace it with the path where you want to save the OpenAPI specification.

This command will take your Postman collection, process it, and save the resulting OpenAPI specification as a YAML file. Ensure that you provide the correct file paths.

Convert OpenAPI Spec to JavaScript Code

Step 1: Configuring Your Java Environment

If you plan to generate code from your OpenAPI specification, you'll need a Java environment set up. Use the following commands to set the JAVA_HOME environment variable and update the PATH:

    export JAVA_HOME=/usr/lib/jvm/java-1.11.0-openjdk-amd64
    export PATH=$JAVA_HOME/bin:$PATH

- export JAVA_HOME=/usr/lib/jvm/java-1.11.0-openjdk-amd64: This command sets the JAVA_HOME environment variable to the specified directory, which is typically the root directory of a specific JDK version. In this case, it points to the root directory of OpenJDK 11.

- export PATH=$JAVA_HOME/bin:$PATH: This command prepends the JDK's bin directory to the PATH environment variable. The PATH variable is a list of directories where the system looks for executable files, so putting $JAVA_HOME/bin first ensures that the java executable from this JDK is the one that runs.

These commands ensure that the intended Java version is used in your development environment.

Step 2: Generating JavaScript Code from OpenAPI

To complete our journey, we'll use the OpenAPI Generator CLI to generate code from our OpenAPI specification. First, download the CLI JAR file using wget:

wget https://repo1.maven.org/maven2/org/openapitools/openapi-generator-cli/7.0.1/openapi-generator-cli-7.0.1.jar -O openapi-generator-cli.jar

- wget: This is the command-line tool used for downloading files from the internet.

- https://repo1.maven.org/maven2/org/openapitools/openapi-generator-cli/7.0.1/openapi-generator-cli-7.0.1.jar: This is the URL of the JAR file you want to download.

- -O openapi-generator-cli.jar: This part of the command specifies that you want to save the downloaded file with the name openapi-generator-cli.jar.

This command uses wget to download the CLI JAR file and saves it as openapi-generator-cli.jar.

Finally, run the OpenAPI Generator CLI to create code from your OpenAPI specification:

java -jar ./openapi-generator-cli.jar generate -i ./open-api-result.yml -g javascript -o ./nodejs_api_client

- java -jar ./openapi-generator-cli.jar: This part of the command runs the Java JAR file (openapi-generator-cli.jar) using the java command. The JAR file is responsible for generating code from the OpenAPI specification.

- generate: This is a command provided by the OpenAPI Generator CLI to instruct it to generate code based on the OpenAPI specification.

- -i ./open-api-result.yml: This flag specifies the input OpenAPI specification file. In this case, it's using the file named open-api-result.yml.

- -g javascript: This flag specifies the target generator. In this case, it's generating JavaScript code.

- -o ./nodejs_api_client: This flag specifies the output directory where the generated code will be placed. In this case, the code will be generated in a directory called nodejs_api_client in the current working directory.

This command invokes the CLI JAR.

In summary, these steps guide you through converting a Postman collection into an OpenAPI specification and then generating code from that specification. It's a streamlined process that aids in API development and documentation. By using these commands and tools, you can enhance your development workflow and collaboration.


Understanding Financial Swaps

Swaps in the financial industry are complex derivative contracts that are essential in today's financial markets. With a focus on their key characteristics, uses, and roles, this abstract gives a general introduction to swaps.

Step by Step Guide - Running Batch Jobs in Azure Data Factory with Python

This tutorial walks you through creating and running an Azure Data Factory pipeline that runs an Azure Batch workload. A Python script runs on the Batch nodes

Steps to fetch Azure Resources in JSON format

Access Token from Azure Portal

To obtain an access token from Azure for use with APIs, you typically need to use App Registration under Identity for authenticating and authorizing your application.

Deploying Docker image to Azure App Service using GitHub Actions

Dockerfile

A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image.

GitHub Actions

GitHub Actions is a continuous integration and continuous delivery (CI/CD) platform that allows you to automate your build, test, and deployment pipeline. You can create workflows that build and test every pull request to your repository, or deploy merged pull requests to production.